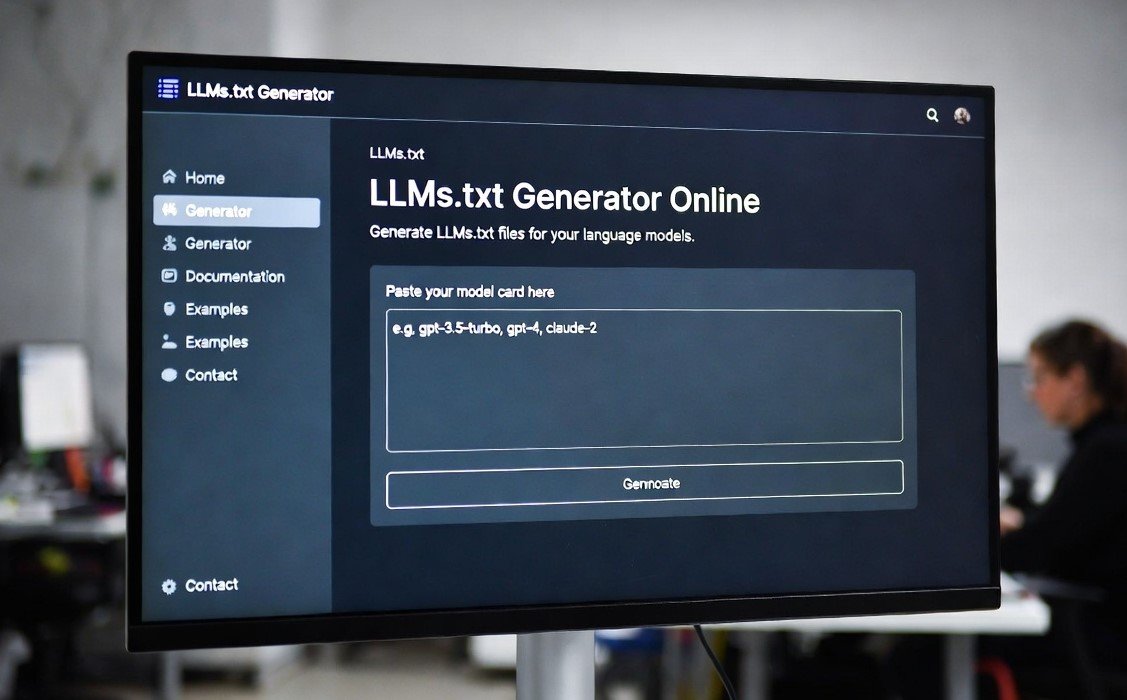

LLMs.txt Generator Online

Create a custom `llms.txt` file that tells AI crawlers whether they may use your website's content.

What is llms.txt and Why Do You Need It?

In the rapidly evolving world of artificial intelligence, your website's content has become a valuable resource for training Large Language Models (LLMs) like ChatGPT, Google's Gemini (formerly Bard), and others. While this technological advancement is exciting, it also raises important questions about content ownership and usage rights. The llms.txt file is a simple tool for stating, in a machine-readable way, how you want AI companies to treat that content.

Think of it as a digital "No Trespassing" sign specifically for AI training bots. Similar to how robots.txt gives instructions to search engine crawlers, llms.txt gives directives to AI crawlers, telling them whether they have your permission to use your website's text, images, and data to train their models. Like a real sign, it states your wishes rather than physically blocking access: llms.txt is not an official standard, and compliance is voluntary, so it only affects crawlers that choose to read and honor it. Within that limit, implementing this file lets you proactively assert your intellectual property rights, put on record that you do not consent to unauthorized use of your unique content, and signal that your hard work should not be used to build commercial AI products without your permission.
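To make the "robots.txt for AI" comparison concrete, here is a minimal Python sketch of how a crawler that chooses to honor the file might read it. It assumes llms.txt uses robots.txt syntax (`User-agent`, `Allow`, `Disallow`), which fits the tool's per-bot Allow/Disallow model but is not confirmed by this page. The bot names (GPTBot, CCBot, ClaudeBot) are real AI crawler user-agent tokens used purely as examples, and `SAMPLE_LLMS_TXT` and `is_allowed` are hypothetical names. The parsing itself relies on the standard library's `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical llms.txt content. ASSUMPTION: the file follows robots.txt
# syntax; the bot tokens are examples, not the tool's actual bot list.
SAMPLE_LLMS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: ClaudeBot
Allow: /
"""


def is_allowed(llms_txt: str, bot: str, path: str = "/") -> bool:
    """Return True if the rules permit `bot` to use `path`.

    Reuses Python's robots.txt parser, so it only works if the file
    really uses robots.txt syntax (an assumption in this sketch).
    """
    parser = RobotFileParser()
    parser.parse(llms_txt.splitlines())  # parse text directly, no network
    return parser.can_fetch(bot, path)


if __name__ == "__main__":
    for bot in ("GPTBot", "CCBot", "ClaudeBot", "SomeOtherBot"):
        status = "allowed" if is_allowed(SAMPLE_LLMS_TXT, bot) else "blocked"
        print(bot, status)
```

Running it reports GPTBot and CCBot as blocked and ClaudeBot as allowed. `SomeOtherBot` comes out as allowed because Python's parser grants access to any agent with no matching block; a `User-agent: *` block would supply a default rule. Nothing in this check stops a crawler that never reads the file.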

How to Use Your Generated llms.txt File

Using the code generated by our tool is a straightforward, three-step process. This guide will help you get it set up on your website in just a few minutes.

- Generate Your Rules: Use the interactive tool above to set "Allow" or "Disallow" permissions for each AI bot. Once you are satisfied with your configuration, you have two options to get the code.

- Copy or Download: You can either click the "Copy to Clipboard" button to copy the text directly, or click the "Download File" button. This will save a ready-to-use file named `llms.txt` to your computer.

- Upload to Your Website: The final and most important step is to upload this `llms.txt` file to the **root directory** of your website. This is the main folder of your site, often named `public_html` or `www`. You can do this using an FTP client such as FileZilla or through the File Manager in your web hosting control panel (such as cPanel or Plesk). Once uploaded, you should be able to open it at `https://yourwebsite.com/llms.txt`, which confirms that it was uploaded to the right place.
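The three steps above can also be scripted. The sketch below is a hypothetical helper, not this tool's code: it turns a per-bot Allow/Disallow choice into text, writes it out as `llms.txt`, and, after upload, downloads `https://yourwebsite.com/llms.txt` to confirm the file is served from the site root. It assumes a robots.txt-style output format; `RULES`, `build_llms_txt`, `save_llms_txt`, and `fetch_live_llms_txt` are illustrative names, and the bot list is an example only.

```python
import urllib.request
from pathlib import Path

# Example permissions mirroring the tool's per-bot "Allow"/"Disallow" choice.
# ASSUMPTIONS: bot names are illustrative; the output format is robots.txt-style.
RULES = {
    "GPTBot": False,           # False -> Disallow
    "Google-Extended": False,
    "ClaudeBot": True,         # True  -> Allow
}


def build_llms_txt(rules: dict[str, bool]) -> str:
    """Render one User-agent block per bot, separated by blank lines."""
    blocks = []
    for bot, allowed in rules.items():
        directive = "Allow" if allowed else "Disallow"
        blocks.append(f"User-agent: {bot}\n{directive}: /")
    return "\n\n".join(blocks) + "\n"


def save_llms_txt(rules: dict[str, bool], directory: str = ".") -> Path:
    """Write llms.txt into `directory`, e.g. a local copy of public_html."""
    path = Path(directory) / "llms.txt"
    path.write_text(build_llms_txt(rules), encoding="utf-8")
    return path


def fetch_live_llms_txt(site: str, timeout: float = 10.0) -> str:
    """Download <site>/llms.txt after upload to confirm it is served.

    urllib raises urllib.error.HTTPError (e.g. 404) if it is not at the root.
    """
    url = site.rstrip("/") + "/llms.txt"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


if __name__ == "__main__":
    print(build_llms_txt(RULES), end="")
    # save_llms_txt(RULES, "public_html")  # hypothetical local folder
    # print(fetch_live_llms_txt("https://yourwebsite.com"))
```

If the file was uploaded to a subfolder instead of the root, `fetch_live_llms_txt` raises `urllib.error.HTTPError`, which makes a misplaced upload easy to spot before you rely on the file.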